Under all the plastic and glass of the device you are using to read this article sits a central processing unit, or CPU. From the latest iPhone to satellites orbiting in space, a CPU is an essential component of modern-day electronics. The processor in an iPhone X is 700 times faster than the Apollo Guidance Computer (AGC) used during the Apollo missions. Let’s take a look at how the CPU has evolved over the years.

A Brief History of the Microprocessor

In 1968, Fairchild introduces the 3708, the first silicon-gate IC.

Project leader Federico Faggin and Tom Klein worked at Fairchild R&D, where silicon-gate technology was developed. This technology would later be used in the world’s first commercial microprocessor.

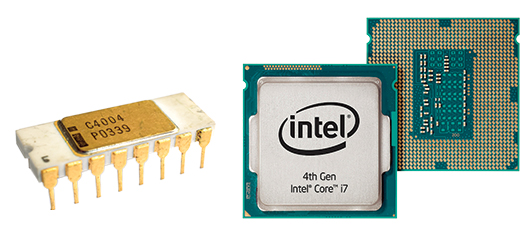

Left-to-Right: Intel 4004, Intel i7

In 1971, under the direction of Federico Faggin, Intel releases the Intel 4004, a 4-bit central processing unit, as the first commercially available microprocessor.

In 1972, Intel releases the 8008, an 8-bit microprocessor. At this time, Intel remains the leader in microprocessor manufacturing.

In the mid-1970s, a new player enters the microprocessor field: National Semiconductor. Taking an interest in Intel’s advances in processors, National Semiconductor releases its own 16-bit processor. Unfortunately, the 16-bit processor would be short-lived, as the 32-bit processor is introduced soon after.

As time progresses, we see the release of 32-bit and 64-bit processors. Mainstream computers are now equipped with a 64-bit processor, and Intel and AMD dominate the field of CPU manufacturers. At the time of writing this article, the top-performing CPU is the Intel Core i7 8700K, with six cores running at up to 4.7GHz.

How are CPUs manufactured today?

Producing a modern processor involves over 1,500 steps and can take weeks to complete. Intel, GlobalFoundries and TSMC are leaders in manufacturing today’s microprocessors. Manufacturing takes place inside cleanrooms, which are rated by how few airborne particles they allow. Under Federal Standard 209E (FS209E), Class 1 is the “cleanest” cleanroom. Under the ISO 14644-1 standard that replaced it, ISO Class 9 is considered the “dirtiest”.
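To make the class numbers more concrete, here is a minimal sketch in Python. It assumes the commonly cited definitions: an FS209E class number is the maximum count of particles of 0.5 µm or larger per cubic foot of air, and an ISO 14644-1 class N allows 10^N × (0.1 / D)^2.08 particles of size D µm or larger per cubic metre. The function names are hypothetical and chosen only for illustration.

```python
# Assumed conversion factor: cubic feet in one cubic metre.
CUBIC_FEET_PER_CUBIC_METRE = 35.3147


def fs209e_limit_per_m3(class_number: float) -> float:
    """Approximate FS209E limit for particles >= 0.5 µm, per cubic metre.

    Assumes the class number is the allowed count per cubic foot.
    """
    return class_number * CUBIC_FEET_PER_CUBIC_METRE


def iso_limit_per_m3(iso_class: float, particle_um: float = 0.5) -> float:
    """Approximate ISO 14644-1 limit for particles >= particle_um, per cubic metre."""
    return 10 ** iso_class * (0.1 / particle_um) ** 2.08


if __name__ == "__main__":
    for fs_class in (1, 100, 100_000):
        print(f"FS209E Class {fs_class}: {fs209e_limit_per_m3(fs_class):,.0f} particles/m^3")
    for iso_class in (3, 5, 9):
        print(f"ISO Class {iso_class}: {iso_limit_per_m3(iso_class):,.0f} particles/m^3")
```

In both schemes, a lower class number means fewer allowed particles and therefore a cleaner room.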

The journey begins with electrical engineers mapping out a circuit diagram. After the schematic diagram is approved, the process moves into the lab.

Silicon Ingot and Wafer Production

Quartz sand is heated to 1,800°C and purified to create a cylindrical ingot of monocrystalline silicon. This cylinder of silicon is moved to a room where it is sliced into thin discs of semiconductor material called wafers. These wafers will be the base for our circuit diagram.

From this point forward, it is extremely important to protect the wafer from contamination. Dust particles can cause multiple circuits to fail. As mentioned, microprocessors are manufactured in a large cleanroom where the air is purified. Wafers are placed in storage boxes when being transported and in desiccator cabinets when not in transit.

A wafer goes through two polishing cycles to produce a smooth surface. A layer of photoresist, a light-sensitive material, is applied to the wafer. This coating is similar to the material used in film and screen printing.

The next step is to imprint the circuit diagram onto the wafer in a process called photolithography. UV light is shone through a photomask, projecting the diagram onto the wafer. Only the areas of photoresist that the photomask leaves exposed to the light are imprinted. Since a wafer is much larger than a single die, hundreds of dies can be imprinted on a single wafer.
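As a rough illustration of how hundreds of dies fit on one wafer, the sketch below uses a widely quoted approximation: divide the wafer area by the die area, then subtract a term for partial dies lost around the circular edge. The 300 mm wafer diameter and 150 mm² die area are assumptions chosen for the example, not figures from any specific product, and real counts also depend on die shape, spacing between dies and edge exclusion.

```python
import math


def dies_per_wafer(wafer_diameter_mm: float, die_area_mm2: float) -> int:
    """Estimate whole dies per wafer using a common first-order approximation."""
    # Total wafer area divided by the area of one die.
    gross = math.pi * (wafer_diameter_mm / 2) ** 2 / die_area_mm2
    # Correction for incomplete dies lost along the round edge of the wafer.
    edge_loss = math.pi * wafer_diameter_mm / math.sqrt(2 * die_area_mm2)
    return max(0, math.floor(gross - edge_loss))


if __name__ == "__main__":
    # Hypothetical example values: a 300 mm wafer and a 150 mm^2 die.
    print(dies_per_wafer(300, 150))
```

With these assumed values the estimate comes out at a few hundred dies, and it drops quickly as the die area grows.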

After exposure to UV light, the wafer is washed in a solvent that dissolves the exposed areas, leaving a pattern that another machine etches into the wafer. The etched wafer is then bombarded with ions, charged atoms that embed themselves in the silicon and change how it conducts electricity.

Next, the transistors are connected with tiny copper wires to make a functional die. These wires are laid down on the wafer using a method similar to photolithography.

After the wafer is tested for failed circuits, each die is cut from the wafer and placed into a CPU package. This completes our journey of microprocessor manufacturing.

That wraps up our brief history and production of the microprocessor. Next time you’re scrolling through your news feed or watching a video on your device, take a moment to appreciate how far the microprocessor has come since its introduction as a 4-bit processor.